Google Cloud Platform (GCP) has emerged as a powerhouse for data engineering, offering a suite of tools tailor-made for handling big data and analytics. For data engineers aiming to stay ahead in 2025, mastering GCP’s ecosystem is becoming essential. This article explores why GCP is a key platform for data engineering, the essential Google Cloud skills for 2025, and the core GCP services every data engineer should know – including BigQuery, Dataflow, Cloud Storage, Pub/Sub, and Cloud Composer. We’ll also discuss the evolving role of data engineers (what’s expected in 2025), provide a roadmap of skills, actionable tips to advance your career, and a FAQ section. Whether you’re transitioning into data engineering or looking to upskill, this guide will help you focus your learning. (Along the way, we’ll highlight how Refonte Learning’s GCP courses and internships can provide industry-aligned training, helping you gain practical experience with cloud data pipelines and analytics.)

GCP’s Rising Importance in Data Engineering

Google Cloud Platform has rapidly gained traction in the data world, thanks to Google’s expertise in big data and distributed computing. GCP’s data services are built on the same technologies Google uses internally (for example, BigQuery’s engine evolved from Google’s Dremel system). As companies handle ever-larger datasets and real-time data streams, GCP provides scalable, fully-managed tools that simplify these challenges. Data engineers in 2025 are expected to be cloud-savvy, and many organizations are adopting or migrating to GCP for its strength in analytics and AI integration.

Why GCP for data engineering? A few reasons stand out:

Innovation and Integration: GCP offers cutting-edge services like BigQuery (one of the most advanced data warehouses) and seamlessly integrates with AI/ML tools (TensorFlow, Vertex AI). Data engineers can easily incorporate machine learning or advanced analytics into pipelines.

Fully Managed Services: GCP’s philosophy leans towards serverless and managed solutions. This means less time managing infrastructure and more time focusing on data. For example, you don’t need to run and patch your own Kafka or Spark clusters to handle streaming data – you can use Pub/Sub and Dataflow, which are managed for you.

Global Infrastructure: Google’s global network and data centers ensure services are fast and can handle data across regions. For multinational companies or any data engineering work that requires low latency and high throughput, GCP’s network is a plus.

Open Source and Portability: Many GCP data services are based on open-source standards (e.g., Dataflow uses Apache Beam, Composer is managed Apache Airflow). This makes skills more portable – if you learn Dataflow, you’re essentially learning Apache Beam pipelines which can run on other platforms too.

In 2025, data engineers are expected to be fluent in at least one cloud platform, and GCP is often a top choice. It’s not only about using the cloud for storage or VMs – it’s about leveraging cloud-native data warehouses, streaming processors, and pipeline orchestration tools to build sophisticated cloud data pipelines. GCP’s stack is considered one of the most coherent and powerful for this purpose, which is why many modern data engineering teams are “Google Cloud-first” for new projects.

(Refonte Learning has recognized this trend, offering a dedicated Data Engineering program with a focus on GCP. Through their virtual internships, learners use GCP tools on real projects – giving them practical skills that employers in 2025 are looking for.)

Core GCP Services Every Data Engineer Should Know

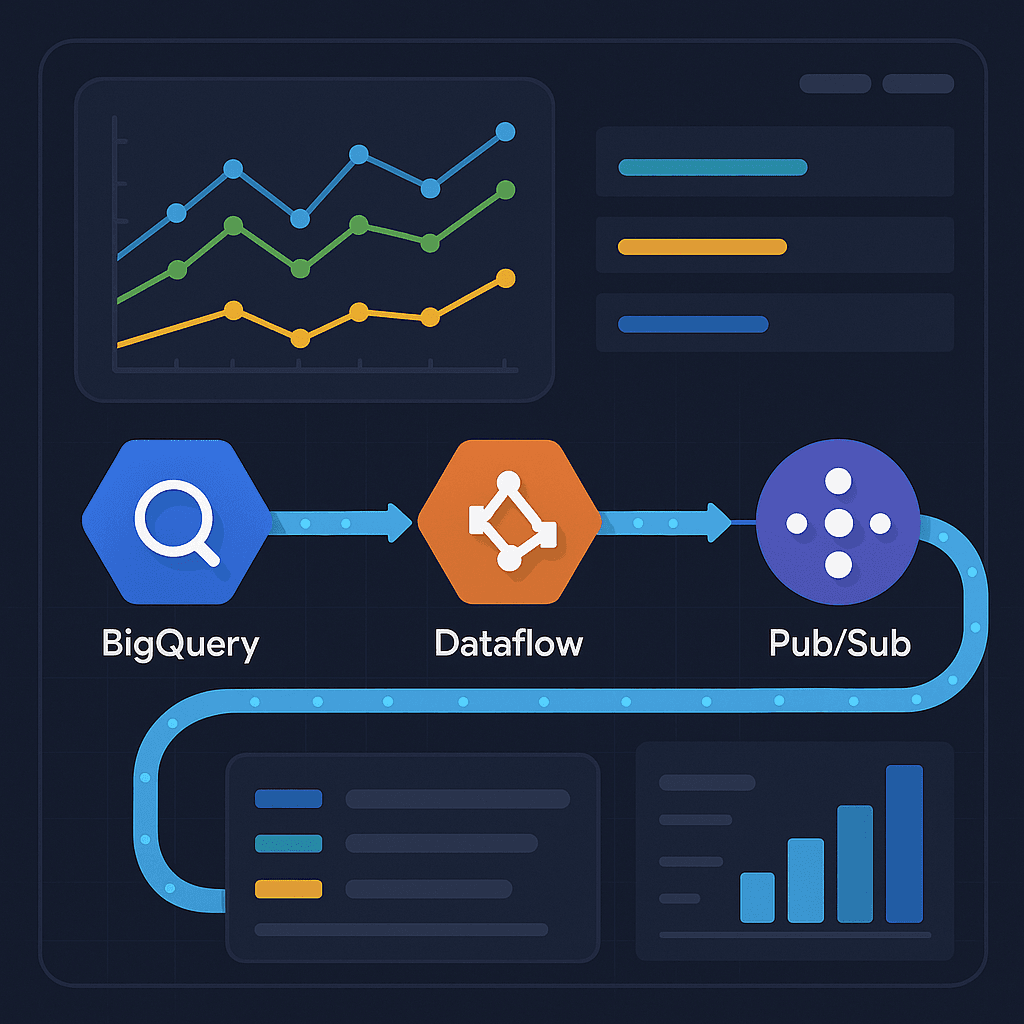

Google Cloud provides a rich set of services, but five stand out as particularly important for data engineers: BigQuery, Dataflow, Cloud Storage, Pub/Sub, and Cloud Composer. Let’s break down each of these and why they’re essential:

BigQuery – Serverless Data Warehouse: BigQuery is GCP’s flagship analytics database. It’s a fully-managed, serverless data warehouse that can run fast SQL queries over petabytes of data. For a data engineer, BigQuery is invaluable for data warehousing and analytics. You can load huge datasets into BigQuery and perform analytics without worrying about indexing or scaling – Google handles it. BigQuery is SQL-based, so if you know SQL, you can start analyzing data immediately. It also has built-in machine learning (BigQuery ML) and supports user-defined functions written in SQL or JavaScript. In 2025, companies expect data engineers to design and manage data warehouses that support BI and ML workloads; BigQuery’s popularity means you should be comfortable with creating tables, designing schemas, writing complex SQL (window functions, joins, etc.), and optimizing queries for cost and performance. One key skill is understanding how to structure data (partitioning, clustering) in BigQuery to balance query speed and cost: with on-demand pricing you are billed by the bytes a query scans, so a filter on the partitioning column that prunes partitions cuts both latency and cost. Because BigQuery separates storage and compute, each can scale independently, which makes it well suited to very large datasets. Learning BigQuery is not just about SQL, but also about using tools like BigQuery Data Transfer Service (for ingesting data) and understanding pricing (knowing how to estimate query costs, etc.). BigQuery is a foundational tool for data engineering, and mastering it will let you deliver insights from massive datasets.
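
To make the link between partitioning and cost concrete, here is a minimal back-of-the-envelope sketch in plain Python. It does not call BigQuery; the table layout (365 daily partitions of 50 GiB), the helper names, and the price per TiB are all assumptions chosen for illustration, so check current pricing for your region before relying on the numbers.

```python
from datetime import date, timedelta

TIB = 2**40  # bytes in one tebibyte


def bytes_scanned(partition_sizes, start, end):
    """Sum the bytes of the daily partitions that fall inside [start, end]."""
    return sum(size for day, size in partition_sizes.items() if start <= day <= end)


def on_demand_cost(num_bytes, price_per_tib):
    """Cost of scanning num_bytes at a given on-demand price per TiB."""
    return num_bytes / TIB * price_per_tib


# Hypothetical table: one partition per day of 2025, 50 GiB each.
first_day = date(2025, 1, 1)
partitions = {first_day + timedelta(days=i): 50 * 2**30 for i in range(365)}

PRICE_PER_TIB = 6.25  # assumed rate, for illustration only

# A query with no partition filter reads every partition.
full_scan = bytes_scanned(partitions, first_day, date(2025, 12, 31))
# A query filtered to the last seven days reads only seven partitions.
last_week = bytes_scanned(partitions, date(2025, 12, 25), date(2025, 12, 31))

for label, scanned in [("full scan", full_scan), ("7-day filter", last_week)]:
    cost = on_demand_cost(scanned, PRICE_PER_TIB)
    print(f"{label}: {scanned / TIB:.2f} TiB scanned, about ${cost:.2f}")
```

The same reasoning applies to real tables: a dry run reports the bytes a query would scan, and that figure is what on-demand billing is based on.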

Dataflow – Unified Stream and Batch Processing: Cloud Dataflow is GCP’s fully-managed service for running data processing pipelines (built on Apache Beam). Think of it as Google’s replacement for having to manage Spark or Hadoop clusters. With Dataflow, you can write a pipeline once and run it for both streaming data (continual input) and batch data (fixed datasets). For example, you might create a pipeline to read messages from Pub/Sub, transform them, and write to BigQuery – Dataflow will handle scaling and processing in near real-time. Or you might process a daily log file dump in batch mode. Dataflow is serverless for data processing, meaning it automatically provisions and adjusts resources as needed, and you pay for the processing time. It’s highly reliable and can handle massive scale. As a data engineer, knowing Dataflow means you can build sophisticated ETL/ELT jobs: merging data from multiple sources, performing aggregations, windowing (for streams), and so on. Key skills include learning the Apache Beam SDK (which can be used in Java, Python, etc.) to define pipelines, understanding windowing and triggers for streaming, and being able to monitor pipeline health. By 2025, streaming data is more prevalent (think IoT, user activity streams, real-time analytics), so being adept with Dataflow positions you to build real-time data pipelines – a highly sought-after skill. Companies want data engineers who can process data with minimal latency for instant insights. Dataflow provides exactly that capability, without needing to babysit cluster uptime. In short, Dataflow lets you create data pipelines that are scalable, unified (batch + stream), and fully managed – a trifecta for modern data engineering.

Cloud Storage – Unified Object Storage: Google Cloud Storage (GCS) is the blob storage service on GCP, equivalent to AWS S3. It is a unified object storage solution, meaning you can store any amount of unstructured data and retrieve it as needed. Why is this important for data engineers? Cloud Storage often serves as the “data lake” in GCP architectures. Raw data from various sources (logs, images, CSV exports, etc.) can be dumped into GCS buckets. From there, you can load it into BigQuery, or process it with Dataflow, or serve it to ML models. It’s durable (designed for 99.999999999% durability) and scalable. You should know how to organize data in buckets and use lifecycle rules (e.g., move older data to cheaper storage classes like Nearline or Coldline to save cost). GCS also integrates with many tools – Hadoop and Spark jobs can read it through the Cloud Storage connector, and pandas can read gs:// paths directly when the gcsfs library is installed. In 2025, with data privacy and governance being key, a data engineer should also know how to secure buckets (IAM roles, encryption) and manage access. Additionally, performance considerations like using the appropriate region or multi-region for your bucket matter (for example, if your Dataflow pipeline is running in us-central1, storing data in the same region, or in a multi-region that includes it, can reduce latency). GCS essentially is the backbone for storing files and large data that doesn’t fit (or belong) in a database. An essential pattern is to stage data in GCS, then load it into BigQuery or process it via Dataflow. So, being comfortable with GCS (command-line tools like gsutil or the newer gcloud storage commands, understanding consistency and object naming best practices) is a must-have skill.
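
As a rough illustration of the lifecycle rules mentioned above, the sketch below builds a lifecycle configuration as a Python dictionary and prints it as JSON. The helper name and the day thresholds (30, 90, 365) are assumptions for the example; the rule layout follows the JSON format Cloud Storage uses for lifecycle configurations.

```python
import json


def lifecycle_config(nearline_after_days, coldline_after_days, delete_after_days=None):
    """Build a lifecycle configuration that moves aging objects to cheaper classes."""
    rules = [
        {
            "action": {"type": "SetStorageClass", "storageClass": "NEARLINE"},
            "condition": {"age": nearline_after_days},
        },
        {
            "action": {"type": "SetStorageClass", "storageClass": "COLDLINE"},
            "condition": {"age": coldline_after_days},
        },
    ]
    if delete_after_days is not None:
        # Optional final rule: delete objects once they reach this age.
        rules.append({"action": {"type": "Delete"}, "condition": {"age": delete_after_days}})
    return {"rule": rules}


# Illustrative thresholds: Nearline at 30 days, Coldline at 90, delete at 365.
config = lifecycle_config(30, 90, delete_after_days=365)
print(json.dumps(config, indent=2))
```

Saved to a file, a configuration like this can be applied to a bucket with `gsutil lifecycle set lifecycle.json gs://your-bucket`, where the file and bucket names are placeholders.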

Cloud Pub/Sub – Real-Time Messaging: Pub/Sub stands for Publish/Subscribe – it’s GCP’s fully-managed messaging service that enables decoupled, asynchronous data ingestion. Think of scenarios: a web application emits user click events, a fleet of IoT sensors sends readings, or a database sends a change event – these can be published as messages to a Pub/Sub topic. Downstream, subscribers (which could be Dataflow pipelines, Cloud Functions, or custom consumers) receive those messages and process them. Cloud Pub/Sub is designed for high throughput and reliability, capable of handling millions of messages per second. It decouples producers and consumers, which is a fundamental principle in modern data architecture. As a data engineer, understanding Pub/Sub is key for building event-driven systems and streaming pipelines. For instance, if you’re capturing user activity on a website, you might publish events to Pub/Sub and have a Dataflow pipeline subscribe and write those events to BigQuery in real-time. Pub/Sub ensures that messages are delivered (by default it offers at-least-once delivery, so you should design idempotent consumers to handle potential duplicates). Skills to learn here include designing topic/subscription models, choosing between push and pull subscriptions, and managing throughput (scaling is mostly automatic, but you can batch messages, etc., to optimize). Also, knowing how to use dead-letter topics and replay messages if needed is useful. By 2025, many data workflows are moving from batch to real-time streaming, and Pub/Sub is often the first entry point for streaming data in GCP. It plays well with other services: for example, trigger Cloud Functions on Pub/Sub events, or use Pub/Sub with Cloud Run. Mastery of Pub/Sub means you can build systems that are reactive and can handle data in motion – a hallmark of advanced data engineering. Plus, concepts you learn with Pub/Sub (asynchronous messaging, pub-sub patterns) are transferable to other technologies like Kafka. But with Pub/Sub, Google manages the infrastructure and scaling for you, so you can focus on the data logic.
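
Because at-least-once delivery means the same message can arrive twice, an idempotent consumer is worth sketching. The example below is plain Python and does not use the Pub/Sub client library; the `Message` and `IdempotentConsumer` classes are hypothetical, and the in-memory set of seen IDs is a simplification (a real consumer would usually keep that state in a durable store).

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    message_id: str  # unique ID assigned when the message is published
    data: dict


@dataclass
class IdempotentConsumer:
    seen_ids: set = field(default_factory=set)
    totals: dict = field(default_factory=dict)

    def handle(self, msg):
        """Apply a message once; return False if it was already processed."""
        if msg.message_id in self.seen_ids:
            return False  # duplicate delivery, safe to acknowledge and skip
        self.seen_ids.add(msg.message_id)
        user = msg.data["user"]
        self.totals[user] = self.totals.get(user, 0) + msg.data["clicks"]
        return True


deliveries = [
    Message("m1", {"user": "ana", "clicks": 2}),
    Message("m2", {"user": "ben", "clicks": 1}),
    Message("m1", {"user": "ana", "clicks": 2}),  # redelivered duplicate
]

consumer = IdempotentConsumer()
results = [consumer.handle(m) for m in deliveries]
print(results, consumer.totals)
```

The duplicate is detected by its ID, so the click totals are the same no matter how many times a message is redelivered.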

Cloud Composer – Workflow Orchestration (Managed Airflow): Cloud Composer is GCP’s managed Apache Airflow service. Apache Airflow is a popular tool for defining, scheduling, and monitoring complex workflows (DAGs – Directed Acyclic Graphs of tasks). Cloud Composer takes Airflow and runs it for you in GCP (on Google Kubernetes Engine behind the scenes), so you don’t have to maintain Airflow servers. For data engineers, Composer is the go-to for orchestrating tasks: for example, a nightly pipeline that extracts data from an API, loads it into Cloud Storage, then triggers a BigQuery load, and sends a Slack alert on success. Each of those steps can be a task in an Airflow DAG, and Composer will ensure they run in order, retry on failure, etc. Composer can orchestrate across hybrid and multi-cloud environments too, but it shines when connecting GCP services (it has built-in operators for BigQuery, Dataflow, Pub/Sub, etc.). Skills to develop: writing Airflow DAGs in Python, using Airflow operators to interact with GCP (like BigQueryInsertJobOperator or DataflowTemplatedJobStartOperator from Airflow’s Google provider package), and understanding Airflow concepts (tasks, dependencies, scheduling). By 2025, companies expect data engineers to not only build pipelines but also automate and schedule workflows reliably. Cron jobs might handle simple schedules, but for complex sequences and error handling, Airflow/Composer is industry-standard. Using Cloud Composer, you avoid the hassle of updating Airflow versions or dealing with the underlying infra – Google does that. You just focus on your workflows. It’s important to learn how to monitor DAG runs in Composer’s Airflow UI and debug issues (e.g., a task failing at 3 AM – where do you see the logs? how do you retry?). Another tip: since Composer runs on Google Kubernetes Engine, understanding how to size your environment (how many schedulers, etc., which is configured via Composer settings) can help optimize cost.

Cloud Composer allows a data engineer to coordinate the various moving parts in a data platform. For instance, a weekly job might run a Dataflow pipeline, then a BigQuery query to aggregate results, then a Cloud Function to email a report – Composer ties these together. In short, Cloud Composer (Airflow) is your automation glue in GCP. In 2025’s data engineering landscape, being adept at workflow orchestration sets apart the senior engineers from the juniors.
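
The two ideas an orchestrator adds over a cron job, dependency ordering and retries, can be sketched in a few lines of standard-library Python. This is not Airflow’s API: `run_workflow`, the task names, and the simulated transient failure are all hypothetical, and a real DAG would declare the same structure with Airflow operators.

```python
from graphlib import TopologicalSorter


def run_workflow(tasks, dependencies, max_retries=2):
    """Run callables in dependency order, retrying a failed task up to max_retries times."""
    log = []
    for name in TopologicalSorter(dependencies).static_order():
        for attempt in range(1, max_retries + 2):
            try:
                tasks[name]()
                log.append((name, attempt, "success"))
                break
            except Exception:
                log.append((name, attempt, "failed"))
        else:
            # Every attempt failed: stop so downstream tasks do not run.
            raise RuntimeError(f"task {name!r} failed after {max_retries + 1} attempts")
    return log


calls = {"load_to_bigquery": 0}


def flaky_load():
    """Simulated load step that fails once with a transient error, then succeeds."""
    calls["load_to_bigquery"] += 1
    if calls["load_to_bigquery"] == 1:
        raise ConnectionError("transient failure")


tasks = {
    "extract_api": lambda: None,
    "stage_in_gcs": lambda: None,
    "load_to_bigquery": flaky_load,
    "notify": lambda: None,
}
# Each key lists the tasks that must finish before it can start.
dependencies = {
    "stage_in_gcs": {"extract_api"},
    "load_to_bigquery": {"stage_in_gcs"},
    "notify": {"load_to_bigquery"},
}

log = run_workflow(tasks, dependencies)
for entry in log:
    print(entry)
```

The log shows the load step failing once and succeeding on its second attempt before the notification runs, which is the behavior Composer provides through task dependencies and retry settings.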

These five services – BigQuery, Dataflow, Cloud Storage, Pub/Sub, Composer – form the backbone of many GCP data solutions. Here’s a quick illustration of how they might all work together: Suppose you’re building a real-time analytics pipeline for an e-commerce site. User clickstreams go into Pub/Sub, a Dataflow pipeline reads from Pub/Sub and does transformations, then loads data into BigQuery for analysis, Cloud Storage holds raw logs or backup data, and Cloud Composer schedules batch jobs (like a daily summary or a machine learning model retraining that uses BigQuery data). Each piece is crucial, and together they enable a robust, scalable pipeline.

(At Refonte Learning, the GCP-focused courses ensure you get hands-on practice with each of these services. For example, you might implement a mini-project where you use Pub/Sub + Dataflow to stream data into BigQuery, and then use Composer to orchestrate a daily BigQuery export to Cloud Storage. This integrated experience cements how the tools function in concert.)

The Data Engineer’s Role in 2025 and Skill Roadmap

The role of a data engineer has been expanding. By 2025, a data engineer is not just an ETL developer or a pipeline builder; they are a critical partner in enabling data-driven decisions, maintaining data quality, and often working closely with data scientists and analysts. Here’s how the role is shaping and a suggested roadmap to build the Google Cloud skills for 2025:

Evolving Role of Data Engineers:

More Automation & AI: Data engineers are expected to automate data pipelines and also integrate AI/ML components. This might mean deploying machine learning models (built by data scientists) into production data pipelines or using AI to optimize data workflows. GCP’s ecosystem (BigQuery ML, AutoML in Vertex AI, etc.) makes this easier, but data engineers need familiarity with these tools.

Streaming and Real-Time Data: The days of only doing daily batch jobs are ending. Businesses want insights in real-time. Data engineers must handle streaming data, real-time analytics, and ensure systems can deliver data with minimal lag. GCP’s Pub/Sub and Dataflow are a direct response to this need.

DataOps and DevOps Mentality: There’s more emphasis on reliability, testing, and deployment in data engineering. Treating data pipelines as production code, using CI/CD, monitoring data quality – these are important. On GCP, this could involve using tools like Cloud Build for pipeline deployments or monitoring pipelines with Cloud Monitoring (formerly Stackdriver).

Collaboration and Business Understanding: Data engineers in 2025 work closely with other teams. Understanding the business context (what the data is for) helps in designing better data models. They might also ensure compliance (like GDPR) by implementing data governance solutions.

Skills Ladder / Roadmap: (for someone aiming to become a proficient GCP data engineer)

Foundational Skills (Step 1): Start with core programming and database knowledge. Ensure you’re comfortable with Python (or Java/Scala if you prefer – but Python is often favored in GCP due to its presence in AI and Beam). Master SQL thoroughly, since you’ll use it with BigQuery. Learn the basics of cloud computing (what is a VM, what is a managed service, etc.). Gain a high-level understanding of all GCP’s data services (even beyond the big five, know that there’s Dataproc for Hadoop/Spark, Bigtable for NoSQL, etc., even if you don’t use them initially). At this stage, consider the Google Cloud Associate Cloud Engineer certification, which covers core services and general cloud usage, or work through Google’s data engineer learning path.

Data Ingestion and Storage (Step 2): Learn how to ingest data into GCP. This involves Cloud Storage (uploading data, organizing buckets) and Pub/Sub (publishing/subscribing messages). Practice with a scenario like: take a CSV, put it in Cloud Storage, then load it into BigQuery; or send a JSON message to Pub/Sub and catch it with a Cloud Function that writes to Firestore. This builds your understanding of how data enters the system. Also, start learning about data formats (CSV vs JSON vs Avro vs Parquet) and why format matters for BigQuery or Dataflow (e.g., Parquet is efficient for analytics). You should also become familiar with Data Fusion (a GCP ETL tool) or third-party ingestion tools if relevant, but focusing on Pub/Sub and Storage is a good start.
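
To see why format matters, consider converting a CSV export to newline-delimited JSON, a format BigQuery accepts for loads. CSV carries no type information, so the sketch below casts selected columns explicitly. The function name, the sample data, and the `int_fields` parameter are assumptions for illustration.

```python
import csv
import io
import json


def csv_to_ndjson(csv_text, int_fields=()):
    """Convert CSV text with a header row into newline-delimited JSON."""
    reader = csv.DictReader(io.StringIO(csv_text))
    lines = []
    for row in reader:
        # Every CSV value arrives as a string, so cast the numeric columns.
        for name in int_fields:
            row[name] = int(row[name])
        lines.append(json.dumps(row))
    return "\n".join(lines)


sample = "order_id,country,amount\n1001,DE,25\n1002,US,40\n"
ndjson = csv_to_ndjson(sample, int_fields=("order_id", "amount"))
print(ndjson)
```

Each output line is a self-contained JSON record with typed values. Avro and Parquet go a step further by embedding the schema in the file itself.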

BigQuery Mastery (Step 3): Dedicate time to become very good at BigQuery. This means not just writing SELECT * queries, but learning how to optimize queries (understand BigQuery’s architecture – it’s a columnar store with parallel execution, so selecting only the columns you need reduces the data scanned). Learn partitioning and clustering in BigQuery to improve performance. Try out BigQuery ML to see how a data engineer might train a quick model using SQL. Also, learn how to use BigQuery to transform data (many ETL tasks can be SQL queries in BigQuery, avoiding the need for a separate pipeline). Understand controlling costs: using dry-run queries to estimate bytes scanned before running, etc. A good goal is to obtain the Google Cloud Professional Data Engineer certification, which has a heavy focus on designing BigQuery-based solutions. That cert will ensure you’ve covered security (like using IAM to control access to datasets), recovery (BigQuery’s time travel feature lets you query or restore a table as it existed at an earlier point within the retention window), and integration (BigQuery can be accessed via APIs, or connected to BI tools like Looker and Looker Studio, formerly Data Studio).
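
A common SQL-only transformation is keeping the latest row per key with a window function. The sketch below runs the query against an in-memory SQLite database purely as a local stand-in so it can be executed without a cloud project; the table and column names are hypothetical. The subquery form of `ROW_NUMBER()` shown here is standard SQL and should carry over to BigQuery with little change.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE raw_customers (customer_id INTEGER, email TEXT, updated_at TEXT)"
)
conn.executemany(
    "INSERT INTO raw_customers VALUES (?, ?, ?)",
    [
        (1, "ana@old.example", "2025-01-01"),
        (1, "ana@new.example", "2025-03-01"),  # newer version of customer 1
        (2, "ben@example.com", "2025-02-15"),
    ],
)

# Number the rows of each customer from newest to oldest, then keep row 1.
DEDUP_SQL = """
SELECT customer_id, email, updated_at
FROM (
    SELECT *,
           ROW_NUMBER() OVER (
               PARTITION BY customer_id
               ORDER BY updated_at DESC
           ) AS row_num
    FROM raw_customers
)
WHERE row_num = 1
ORDER BY customer_id
"""

latest = conn.execute(DEDUP_SQL).fetchall()
print(latest)
```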

Pipelines with Dataflow (Step 4): Once comfortable with batch querying, move to learning Dataflow/Apache Beam for more complex transformations and streaming. Start by writing a simple batch pipeline in Dataflow (for example, read from one BigQuery table, do some transformation in Python, write to another). Then explore streaming: create a pipeline that reads from Pub/Sub and writes to BigQuery or Cloud Storage. Learning Beam’s model (PCollections, transforms, DoFns) and how Dataflow orchestrates it will take some practice. Also learn about windowing (fixed windows, sliding windows) which is essential for streaming aggregations (like “count events in last 1 minute, update every 30 seconds”). The Beam website and Google’s documentation have good examples. By mastering this, you position yourself at the cutting-edge of data engineering (stream processing). Many data engineers in 2025 are expected to manage streaming pipelines for real-time analytics, so this is a high-value skill.
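
The difference between fixed and sliding windows is easier to see with numbers. The sketch below is plain Python, not the Apache Beam SDK; the function names and event timestamps are made up for illustration. It assigns events to 60-second fixed windows and to 60-second sliding windows that start every 30 seconds, matching the “last 1 minute, update every 30 seconds” example.

```python
from collections import Counter


def fixed_window(timestamp, size):
    """Return the start of the single fixed window that contains timestamp."""
    return timestamp - timestamp % size


def sliding_windows(timestamp, size, period):
    """Return the starts of every sliding window that contains timestamp."""
    starts = []
    start = timestamp - timestamp % period  # latest window start at or before the event
    while start > timestamp - size:  # window [start, start + size) still covers the event
        starts.append(start)
        start -= period
    return sorted(starts)


event_times = [5, 20, 35, 70, 95]  # seconds since the stream started

# Each event lands in exactly one fixed window.
fixed_counts = Counter(fixed_window(t, 60) for t in event_times)

# Each event lands in size / period = 2 overlapping sliding windows.
sliding_counts = Counter(
    start for t in event_times for start in sliding_windows(t, 60, 30)
)

print("fixed:  ", dict(sorted(fixed_counts.items())))
print("sliding:", dict(sorted(sliding_counts.items())))
```

Windows are identified here by their start time in seconds. Because sliding windows overlap, every event is counted in two of them, which is why the sliding counts sum to twice the number of events.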

Orchestration & Workflow Management (Step 5): Now tie it together with Cloud Composer (Airflow). Practice writing an Airflow DAG that runs a pipeline end-to-end. For example: the first task triggers a Dataflow job (for instance with DataflowTemplatedJobStartOperator from Airflow’s Google provider package), the second task runs a BigQuery query or export, and the third sends an email via an HTTP call to a Cloud Function. Run it in Cloud Composer. Learn how to schedule it (maybe a daily run), and monitor it in the Airflow UI. This skill ensures you can productionize pipelines and not just run them ad-hoc. Many companies want engineers who can automate everything – showing you know Airflow/Composer is a big plus. Additionally, as part of this step, get comfortable with the Cloud SDK and Terraform if possible, as infrastructure-as-code might be needed to provision resources (some orgs use Terraform to set up BigQuery datasets, IAM, etc. as part of a deployment pipeline).

Advanced Topics (Step 6): Finally, round out with advanced and emerging skills. This could include: data governance on GCP (using tools like Data Catalog or Dataplex for metadata, or configuring column-level security in BigQuery, etc.), performance tuning (like using Bigtable or Spanner for specific low-latency use cases), and learning about design patterns for cloud data pipelines (e.g., Lambda architecture, Data Mesh concepts, etc.). Also, familiarize yourself with adjacent domains: a bit of Google Kubernetes Engine (some data workloads run on K8s with custom tools), and ML pipelines (using Vertex AI Pipelines, the successor to AI Platform Pipelines, or Kubeflow on GCP, which might involve a data engineer’s support). Since 2025 is likely to see more convergence of data engineering with machine learning (think MLOps), having some knowledge of how data engineering feeds into ML (like preparing training data, feature engineering pipelines) will be valuable.

Throughout this journey, one thing remains constant: hands-on practice. As you learn each service, build a mini-project. For instance, for BigQuery – take a public dataset and do some analysis; for Dataflow – create a pipeline to transform some data; for Composer – write a DAG that orchestrates a couple of dummy tasks. This solidifies your understanding. Refonte Learning’s approach to teaching aligns with this – they emphasize project-based learning, where each of these skills is applied to realistic tasks, often under mentorship, which can accelerate your progress dramatically.

Actionable Tips to Boost Your GCP Data Engineering Career

Get Google Cloud Certified: Certifications can validate your skills to employers. Aim for the Professional Data Engineer certification on GCP – it covers designing data processing systems, operationalizing ML models, data security, and more. The study process will fill any knowledge gaps about GCP services and best practices. (Refonte Learning GCP courses often align with certification objectives and can prep you through practice exams and projects.)

Build a Cloud Portfolio Project: Nothing shows skill better than a real project. Design a small data pipeline on GCP and document it. For example, build a cloud data pipeline that ingests some public data (maybe a public event stream or IoT sensor data) via Pub/Sub, processes it with Dataflow, stores it in BigQuery, and then visualizes the results in a dashboard built with Looker Studio (formerly Data Studio). This hands-on project demonstrates your ability to integrate multiple GCP services and yields something you can show in interviews or on GitHub.

Learn Infrastructure as Code (IaC): As data pipelines grow, manually configuring resources won’t scale. Get comfortable with tools like Terraform or Cloud Deployment Manager to script your GCP resources (BigQuery datasets, Pub/Sub topics, etc.). This also ties into CI/CD – consider using Cloud Build to automate deployments of Dataflow code or Airflow DAGs. Employers in 2025 value DataOps skills – treating data pipelines with the same rigor as software engineering.

Optimize and Cost Management: Practice optimizing queries and pipelines for cost. For instance, learn to use BigQuery’s cost estimator, partition tables to reduce scan sizes, and use Dataflow’s autoscaling effectively. Understanding how to choose the right VM types in Dataflow or the right storage class in GCS can save significant money at scale. Being able to discuss how you optimized a pipeline (and maybe saved X% cost) is a great talking point in job interviews. It shows you can engineer not just for performance but also efficiency.

Stay Updated with GCP Innovations: Google Cloud releases new features frequently. For example, in recent years they introduced BigQuery Omni (cross-cloud analytics), Dataflow Prime, and more. Keep an eye on the Google Cloud Blog and product release notes. Following GCP-focused communities (like r/googlecloud on Reddit or Google Cloud Community forums) can keep you informed about what’s new. Being knowledgeable about the latest tools (like Dataplex for data governance, or Dataform for data modeling in BigQuery) could give you an edge and allow you to propose modern solutions at work.

Networking and Community: Join data engineering and GCP groups – whether local meetups or online forums. Networking can lead to mentorship opportunities, job leads, or simply learning from peers. Contributing to open source projects or writing about your learning (e.g., a blog post about something cool you did in GCP) can also get you noticed. Refonte Learning often encourages learners to participate in hackathons or open-source contributions as part of their program, which can be valuable experience.

Solidify the Fundamentals: Amidst all the cloud tools, don’t neglect core data engineering fundamentals. Ensure you have strong skills in SQL (this can’t be overstated – advanced SQL skills are always in demand), understanding of databases (transaction vs analytics databases), and programming (data structures, algorithms for when you write data processing logic). GCP skills rest on these fundamentals. For example, knowing how to normalize data or design a star schema is still important when loading data into BigQuery. Keep sharpening these alongside your cloud learning.

By following these tips, you’ll not only gain technical skills but also demonstrate the professional mindset needed for a successful data engineering career. Remember, practical experience is key: try to simulate real-world scenarios in your practice (like implementing a slowly changing dimension in BigQuery, or handling late-arriving data in a streaming pipeline). The more scenarios you’ve encountered, the more confident you’ll be in job interviews and on the job.

(One more tip: consider an internship or guided project-based course. Refonte Learning offers industry-aligned GCP internships that let you work on projects mentored by experienced engineers. This can accelerate your learning and give you concrete achievements to talk about. For instance, completing a project to build a data pipeline for a mock company through Refonte will expose you to the end-to-end process and tools in a way self-study might not.)

FAQ: Advancing a Data Engineering Career with GCP

Q1: Which Google Cloud certification is best for data engineers in 2025?

A: The Google Cloud Professional Data Engineer certification is the most relevant for data engineers. It’s a professional-level cert that tests your ability to design, build, secure, and monitor data processing systems on GCP. It covers BigQuery, Dataflow, Pub/Sub, Bigtable, ML integration, and more. Having this certification can significantly boost your resume by showing employers you have a broad and in-depth knowledge of GCP’s data services. To prepare, you might first take the Associate Cloud Engineer cert (covering core infrastructure basics) as a stepping stone, then focus on data-specific services for the Professional Data Engineer exam. Additionally, Google offers a Professional Cloud DevOps Engineer cert – while not data-specific, it covers automation and monitoring skills that are very useful in data engineering roles (in 2025, the lines between data engineering and DevOps are blurring with the rise of DataOps). Refonte Learning’s GCP training path is aligned with the Professional Data Engineer certification, providing courses and practice exams to help you succeed.

Q2: Do I need to learn programming for GCP data engineering, or can I manage with just SQL and tools?

A: While SQL is extremely important (and BigQuery lets you do a lot with SQL), you will benefit from knowing programming – especially Python or Java. Many data engineering tasks involve writing scripts or pipeline code. For example, developing Dataflow pipelines uses Python (with Apache Beam) or Java. Cloud Composer uses Python (since Airflow DAGs are written in Python). Also, if you need to do data preprocessing that’s complex, you might write a Python script or Cloud Function. So, yes, you should have decent programming skills. Python tends to be the go-to language due to its ease of use and libraries (like pandas, Apache Beam, etc.). That said, GCP does have many UI-based tools (Data Fusion provides a visual ETL interface, and some simpler workflows can be done with SQL in BigQuery alone). But those who can code will find it easier to implement custom logic and automate tasks. Additionally, scripting skills help in using GCP’s SDK/CLI for automation. If you’re not confident in coding, start with Python basics and then practice by writing small data scripts (e.g., a script to call an API and dump data to Cloud Storage). Over time, integrate that with GCP services. Remember, a data engineer in 2025 is often expected to handle end-to-end data flow, which inevitably includes some coding. Refonte Learning’s data engineering program includes Python programming modules and exercises specifically geared towards data tasks, which can be very helpful if you’re coming from a SQL-only background and need to ramp up your coding.

Q3: How does BigQuery differ from traditional databases, and do I need to learn data modeling for it?

A: BigQuery is a cloud-native, serverless data warehouse, quite different from a traditional on-premise SQL database. First, BigQuery decouples storage and compute – data is stored in a compressed columnar format in Google’s infrastructure, and compute (query processing) is allocated on the fly when you run queries. This means it can handle massive scale without you provisioning a big server upfront. Second, BigQuery’s default on-demand pricing bills you by the data each query scans, which encourages you to optimize query patterns; the alternative is capacity-based pricing through BigQuery editions, which replaced the older flat-rate plans.

Unlike traditional OLTP databases, BigQuery is optimized for analytical queries across large datasets, not for single row lookups or transaction processing. You can declare primary and foreign keys on a table, but BigQuery does not enforce them, so uniqueness and referential integrity have to be handled in your pipeline logic if you need them. That said, data modeling is still very relevant. You might denormalize data for BigQuery to optimize query performance (since joins on petabyte tables are expensive, many BigQuery schemas use nested/repeated fields or one big table design). However, a more normalized design (star schema or snowflake schema) can still make sense to reduce duplication and make maintenance easier.

A star schema is a common recommendation for BigQuery: large fact tables joined to smaller dimension tables, which BigQuery handles well, especially if you partition or cluster the fact table on columns that are commonly filtered or joined on. In 2025, data engineers should know the principles of data modeling (like when to use normalization vs denormalization, partitioning strategies, etc.) and apply them appropriately in BigQuery. So yes, invest time in learning data modeling. Tools like Dataform (now part of Google Cloud) can help manage SQL-based transformations and enforce a certain structure. But ultimately, understanding the underlying needs of your data (query patterns, update frequency, etc.) will guide your modeling decisions. In summary: BigQuery is not your typical transactional DB – it’s an analytical engine – and you should model your data to suit analytics and large-scale aggregations. Refonte Learning’s curriculum often includes case studies on designing BigQuery schemas for different scenarios, giving learners practice in this important skill.
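
As a small illustration of the nested/repeated design mentioned above, the sketch below takes a star-style pair of tables (a customer dimension and an order fact table) and folds the orders into each customer record as a repeated field. The data, field names, and `nest_orders` helper are hypothetical, and the code is plain Python rather than anything BigQuery-specific.

```python
from collections import defaultdict

# Dimension table: one row per customer.
customers = {
    1: {"name": "Ana", "country": "DE"},
    2: {"name": "Ben", "country": "US"},
}

# Fact table: one row per order, pointing at a customer.
orders = [
    {"order_id": 1001, "customer_id": 1, "amount": 25},
    {"order_id": 1002, "customer_id": 2, "amount": 40},
    {"order_id": 1003, "customer_id": 1, "amount": 15},
]


def nest_orders(customers, orders):
    """Fold order rows into each customer record as a repeated 'orders' field."""
    grouped = defaultdict(list)
    for order in orders:
        grouped[order["customer_id"]].append(
            {"order_id": order["order_id"], "amount": order["amount"]}
        )
    return [
        {"customer_id": cid, **attrs, "orders": grouped.get(cid, [])}
        for cid, attrs in customers.items()
    ]


nested = nest_orders(customers, orders)
for record in nested:
    print(record)
```

The nested form answers “all orders for a customer” without a join, at the cost of rewriting the customer record whenever an order changes. That trade-off is what the choice between the two designs comes down to.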

Q4: I’m a beginner in data engineering – is Google Cloud a good place to start, or should I learn on-premise tools first?

A: Starting with Google Cloud (or any cloud) is actually a great idea for beginners. Cloud platforms abstract away a lot of the complexity of on-premise tools. For example, instead of learning to set up Hadoop clusters (which can be quite complex with HDFS, YARN, etc.), you can start with Dataflow, which gives you comparable processing capability without any cluster setup. The cloud allows you to experiment quickly – you can load data into BigQuery and play with SQL without installing anything on your machine beyond a browser.

It’s also aligned with where the industry is headed: most companies are either already in the cloud or migrating. GCP in particular is friendly for data work because of its serverless offerings; you won’t be overwhelmed with managing servers or networks initially – you can focus on data logic. That being said, it’s still useful to understand some basics of how things work under the hood (like what Hadoop or Spark are doing) because it helps with certain design decisions. But you definitely don’t need on-prem experience first. Many successful data engineers today started directly with cloud-based tools. If anything, learning cloud first might save you from picking up some legacy habits. Additionally, Google provides a free tier, extensive documentation, and hands-on labs through Google Cloud Skills Boost (formerly Qwiklabs) to get started.

Combine those with a structured learning path (for instance, Refonte Learning’s beginner-friendly projects or Google’s own tutorials) and you can build a solid foundation. So dive into GCP – create a small project, break it, learn from it. As you grow, you can always learn the on-premise equivalents if needed, but chances are the jobs you aim for will involve cloud knowledge more than legacy tech.

Q5: How can Refonte Learning help me advance my data engineering career?

A: Refonte Learning provides a structured, hands-on approach to mastering data engineering skills, with an emphasis on cloud technologies like GCP. If you’re someone who learns best by doing, Refonte’s programs can be a game-changer. They offer industry-aligned courses and virtual internships where you work on real-world projects under the guidance of experienced mentors.

For example, in a Refonte Learning GCP course, you might be tasked with designing a data pipeline for a hypothetical company – ingesting data, processing it, storing it in BigQuery, and visualizing insights. This kind of project simulates what data engineers do on the job, giving you practical experience you can talk about in interviews. Moreover, Refonte’s mentors (who are industry professionals) provide feedback and support, so you’re not just doing tutorials in isolation; you’re building with accountability and expert advice. They also often help with career skills like resume building and interview prep, specifically tuned to tech roles.

Another advantage is that Refonte’s curriculum is continuously updated to match industry demands – so if new GCP features or tools become important (say, a new data governance tool or an update to Dataflow), they incorporate that. Essentially, Refonte Learning bridges the gap between theoretical knowledge and real-world application. Many learners find that completing a project with Refonte boosts their confidence because they’ve essentially done the work of a data engineer already during the program. So, whether you need structured learning from scratch or you want to supplement your self-study with real projects, Refonte Learning can accelerate your journey and make you job-ready in the data engineering field.

Plus, networking with mentors and peers in the program can open up opportunities – you never know if someone you meet through Refonte could refer you to a role. In summary, Refonte Learning offers a comprehensive upskilling path: learn the skills, practice them in projects, and get guidance to land that GCP data engineering role in 2025.

Conclusion: Your GCP Data Engineering Journey

Data engineering in 2025 is an exciting field – it sits at the intersection of software engineering, analytics, and cloud computing. Google Cloud Platform provides data engineers with world-class tools to meet modern data challenges, from handling massive datasets in BigQuery to orchestrating intricate pipelines with Composer.

In this article, we covered the essential GCP services and skills you should focus on: mastering BigQuery for data warehousing, Dataflow for building data pipelines, Pub/Sub for real-time messaging, Cloud Storage for data lakes, and Composer for workflow management. We also discussed how the data engineer’s role is evolving – expecting more real-time processing, integration with AI, and strong DevOps practices – and how you can build a roadmap to acquire those skills.

Remember that becoming a proficient data engineer is a journey. Start with core concepts and incrementally tackle more complex projects. Make use of the wealth of resources available: GCP’s free tier, online documentation, community forums, and structured programs. If you want a guided path, consider a program like Refonte Learning’s GCP data engineering course, where you get hands-on with these technologies in a curated way. By practicing on real projects and possibly earning relevant certifications, you’ll build both skill and confidence.

The demand for skilled data engineers is only growing, and with the right GCP expertise, you’ll be well-positioned to advance your career. Whether you aim to become a senior data engineer, a data architect, or transition into adjacent roles like ML engineer, the foundation you lay now with GCP will pay dividends. So take the next step – set up that GCP project, query that dataset, script that pipeline. Every bit of practice brings you closer to your career goals.

Call to Action: Ready to elevate your data engineering career with Google Cloud skills? Dive into learning by doing. If you need guidance, Refonte Learning offers industry-aligned GCP training and virtual internships. Under expert mentorship, you’ll work on real projects – designing pipelines, solving data problems, and building a portfolio that showcases your abilities. By joining Refonte Learning, you accelerate your journey and connect with a community of like-minded professionals. Don’t wait for the future – start building the Google Cloud skills for 2025 today and secure your place in the next generation of data engineering leaders.

Refonte Learning – empowering the next wave of cloud-savvy data engineers through hands-on learning and practical experience.